Wireless communications have been expanding globally at an exponential rate. The latest embedded version of mobile networking technology is called 4G (fourth generation), and the next version (called 5G, fifth generation) is in the early implementation stage. Neither 4G nor 5G has been tested for safety in credible real-life scenarios. Alarmingly, many of the studies conducted in more benign environments show harmful effects from this radiation. The present article overviews the medical and biological studies that have been performed to date relative to effects from wireless radiation, and shows why these studies are deficient relative to safety. However, even in the absence of the missing real-life components such as toxic chemicals and biotoxins (which tend to exacerbate the adverse effects of the wireless radiation), the literature shows there is much valid reason for concern about potential adverse health effects from both 4G and 5G technology. The studies on wireless radiation health effects reported in the literature should be viewed as extremely conservative, substantially underestimating the adverse impacts of this new technology.

2. Wireless radiation/electromagnetic spectrum

This section overviews the electromagnetic spectrum, and delineates the parts of the spectrum on which this article will focus. The electromagnetic spectrum encompasses the entire span of electromagnetic radiation, including:

• ionizing radiation (gamma rays, x-rays, and the extreme ultraviolet, with wavelengths below ∼10⁻⁷ m and frequencies above ∼3 × 10¹⁵ Hz);

• non-ionizing visible radiation (wavelengths from ∼4 × 10⁻⁷ m to ∼7 × 10⁻⁷ m and frequencies between ∼4.2 × 10¹⁴ Hz and ∼7.7 × 10¹⁴ Hz);

• non-ionizing non-visible radiation: short wavelength radio waves and microwaves, with wavelengths between ∼10⁻³ m and ∼10⁵ m and frequencies between ∼3 × 10¹¹ Hz and ∼3 × 10³ Hz; and long wavelengths, ranging between ∼10⁵ m and ∼10⁸ m, with frequencies ranging between 3 × 10³ Hz and 3 Hz.

How are these frequencies used in practice?

• The low frequencies (3 Hz – 300 kHz) are used for electrical power line transmission (60 Hz in the U.S.) as well as maritime and submarine navigation and communications.

• Medium frequencies (300 kHz – 900 MHz) are used for AM/FM/TV broadcasts in North America.

• Lower microwave frequencies (900 MHz – 5 GHz) are used for telecommunications such as microwave devices/communications, radio astronomy, mobile/cell phones, and wireless LANs.

• Higher microwave frequencies (5 GHz – 300 GHz) are used for radar and proposed for microwave WiFi, and will be used for high-performance 5G.

• Terahertz frequencies (300 GHz – 3000 GHz) are used increasingly for imaging to supplement X-rays in some medical and security scanning applications (Kostoff and Lau, 2017).

In the present study of wireless radiation health effects, the frequency spectrum ranging from 3 Hz to 300 GHz is covered, with particular emphasis on the high frequency communications component ranging from ∼1 GHz to ∼300 GHz. Why was this part of the spectrum selected?
Previous reviews of wireless radiation health effects found that pulsed electromagnetic fields (PEMF) applied for relatively short periods of time could sometimes be used for therapeutic purposes, whereas chronic exposure to electromagnetic fields (EMF) in the power frequency range (∼60 Hz) and microwave frequency range (∼1 GHz to tens of GHz) tended to result in detrimental health effects (Kostoff and Lau, 2013, 2017). Given present concerns about the rapid expansion of 5G communications systems in the absence of adequate and rigorous safety testing, more emphasis will be placed on the communications frequencies in this document. In their highest performance (aka high-band) mode, these systems are projected to use mainly the higher microwave frequencies part of the spectrum.
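The wavelength and frequency figures quoted in this section are tied together by the free-space relation λ = c / f. The following is a minimal Python sketch of that relation, together with a lookup against the usage bands listed above. The function names and band labels are illustrative shorthand, not terms from the article, and the wavelengths are free-space values (wavelengths inside tissue are shorter).

```python
# Speed of light in vacuum in m/s (exact SI value).
C = 299_792_458.0

# Band edges in Hz, copied from the usage list in this section.
# The short labels are illustrative shorthand (an assumption of this sketch).
BANDS = [
    (3.0, 300e3, "low frequencies"),
    (300e3, 900e6, "medium frequencies"),
    (900e6, 5e9, "lower microwave"),
    (5e9, 300e9, "higher microwave"),
    (300e9, 3000e9, "terahertz"),
]


def wavelength_m(frequency_hz: float) -> float:
    """Free-space wavelength in metres: lambda = c / f."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return C / frequency_hz


def band_name(frequency_hz: float) -> str:
    """Return the usage band containing the frequency (lower edge inclusive)."""
    for low, high, name in BANDS:
        if low <= frequency_hz < high:
            return name
    return "outside the 3 Hz to 3000 GHz range listed"


if __name__ == "__main__":
    # Power line frequency, a typical 4G-era carrier, and a millimeter-wave carrier.
    for f in (60.0, 1e9, 60e9):
        print(f"{f:.3g} Hz: {wavelength_m(f):.3g} m ({band_name(f)})")
```

For a 1 GHz carrier the relation gives a free-space wavelength of about 0.30 m, and for 60 GHz about 5 mm, which is why the upper part of the higher microwave band is referred to as millimeter-wave.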

3. Modern wireless radiation exposures

In ancient times, sunlight and its lunar reflections provided the bulk of the visible spectrum for human beings (with fire a distant second and lightning a more distant third). Now, many varieties of artificial light (incandescent, fluorescent, and light emitting diode) have replaced the sun as the main supplier of visible radiation during waking hours. Additionally, EMF radiations from other parts of the non-ionizing non-visible spectrum have become ubiquitous in daily life, such as from wireless computing and telecommunications. In the last two or three decades, the explosive growth in the cellular telephone industry has placed many residences in metropolitan areas less than a mile from a cell tower. Future implementation of the next generation of mobile networking technology, 5G, will increase the cell tower densities by an order of magnitude. Health concerns have been raised about wireless radiation from (1) mobile communication devices, (2) occupational exposure, (3) residential exposure, (4) wireless networks in homes, businesses, and schools, (5) automotive radar, and (6) other non-ionizing EMF radiation sources, such as ‘smart meters’ and ‘Internet of Things’.

4. Demonstrated biological and health effects from prior generations of wireless networking technology

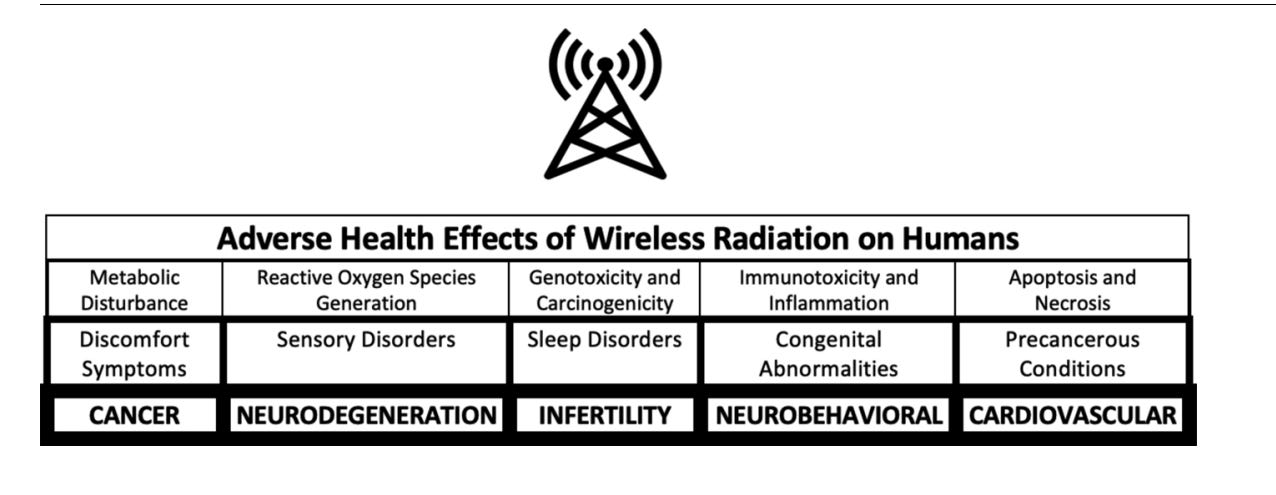

There have been two major types of studies performed to ascertain biological and health effects of wireless radiation: laboratory and epidemiology. The laboratory tests performed provided the best scientific understanding of the effects of wireless radiation, but did not reflect the real-life environment in which wireless radiation systems operate (exposure to toxic chemicals, biotoxins, other forms of toxic radiation, etc.). There are three main reasons the laboratory tests failed to reflect real-life exposure conditions for human beings. First, the laboratory tests have been performed mainly on animals, especially rats and mice. Because of physiological differences between small animals and human beings, there have been continual concerns about extrapolating small animal results to human beings. Additionally, while inhaled or ingested substances can be scaled from laboratory experiments on small animals to human beings relatively straightforwardly, radiation may be more problematic. For non-ionizing radiation, penetration depth is a function of frequency, tissue, and other parameters. Radiation could penetrate much deeper into a small animal’s interior than similar wavelength radiation in humans, because of the much smaller animal size. Different organs and tissues would be affected, with different levels of power density. Second, the typical incoming EMF signal for many/most laboratory tests performed in the past consisted of a single carrier wave frequency; the lower frequency superimposed signal containing the information was not always included. This omission may be important. As Panagopoulos states: “It is important to note that except for the RF/microwave carrier frequency, Extremely Low Frequencies - ELFs (0–3000 Hz) are always present in all telecommunication EMFs in the form of pulsing and modulation. There is significant evidence indicating that the effects of telecommunication EMFs on living organisms are mainly due to the included ELFs….
While ∼50 % of the studies employing simulated exposures do not find any effects, studies employing real-life exposures from commercially available devices display an almost 100 % consistency in showing adverse effects” (Panagopoulos, 2019). These effects may be exacerbated further with 5G: “with every new generation of telecommunication devices…..the amount of information transmitted each moment…..is increased, resulting in higher variability and complexity of the signals with the living cells/organisms even more unable to adapt” (Panagopoulos, 2019). Third, these laboratory experiments typically involved one stressor (toxic stimulus) and were performed under pristine conditions. This contradicts real-life exposures, where humans are exposed to multiple toxic stimuli, in parallel or over time (Tsatsakis et al., 2016, 2017; Docea et al., 2019a). In perhaps five percent of the cases reported in the wireless radiation literature, a second stressor (mainly a biological or chemical toxic stimulus) was added to the wireless radiation stressor, to ascertain whether additive, synergistic, potentiative, or antagonistic effects were generated by the combination (Kostoff and Lau, 2013, 2017; Juutilainen, 2008; Juutilainen et al., 2006). Combination experiments are extremely important because, when other toxic stimuli are considered in combination either with each other or with wireless radiation, the synergies tend to enhance the adverse effects of each stimulus in isolation. This was shown in several studies that evaluated the cumulative effects of chronic exposure to low doses of xenobiotics in combination (Kostoff et al., 2018; Docea et al., 2018; Tsatsakis et al., 2019a; Docea et al., 2019b; Tsatsakis et al., 2019b, c; Fountoucidou et al., 2019).
For those combinations that include wireless radiation, combined exposure to toxic stimuli and wireless radiation translates into much lower levels of tolerance for each toxic stimulus in the combination relative to its exposure levels that produce adverse effects in isolation. Accordingly, the exposure limits for wireless radiation when examined in combination with other potentially toxic stimuli would be far lower for safety purposes than those derived from wireless radiation exposures in isolation. Thus, almost all of the wireless radiation laboratory experiments that have been performed to date are flawed/limited with respect to showing the full adverse impact of the wireless radiation that would be expected under real-life conditions. Either 1) non-inclusion of signal information or 2) using single stressors only tends to underestimate the seriousness of the adverse effects from wireless radiation. Excluding both of these phenomena from experiments, as was done in the vast majority of the reported wireless radiation health effects studies, tends to amplify this underestimation substantially. Thus, the results reported in the biomedical literature should be viewed as 1) extremely conservative and 2) the very low ‘floor’ of the seriousness of the adverse effects from wireless radiation, not the ‘ceiling’. In contrast to the controlled pristine environments that characterize the wireless radiation animal laboratory experiments, the wireless radiation epidemiology studies carried out to date typically involved human beings who had been subjected to myriad known and unknown stressors prior to (and during) the study. The real-life human exposure levels from cell tower studies (reported by Kostoff and Lau (2017)) that showed increased cancer incidence were orders of magnitude lower than those exposure levels generated in the recent highly-funded National Toxicology Program animal laboratory studies (Melnick, 2019). 
We believe the inclusion of real-world effects in the cell tower studies accounted for the orders of magnitude exposure level decreases that were associated with the increased cancer incidence. The laboratory tests were conducted under controlled conditions not reflective of real life, while the epidemiology studies were performed in the presence of many stressors, known and unknown, reflective of real life. The myriad toxic stimuli exposure levels of the epidemiology studies were, for the most part, uncontrolled. A vast literature published over the past sixty years shows adverse effects from wireless radiation applied in isolation or as part of a combination with other toxic stimuli. Extensive reviews of wireless radiation-induced biological and health effects have been published (Kostoff and Lau, 2013, 2017; Belpomme et al., 2018; Desai et al., 2009; Di Ciaula, 2018; Doyon and Johansson, 2017; Havas, 2017; Kaplan et al., 2016; Lerchl et al., 2015; Levitt and Lai, 2010; Miller et al., 2019; Pall, 2016, 2018; Panagopoulos, 2019; Panagopoulos et al., 2015; Russell, 2018; Sage and Burgio, 2018; van Rongen et al., 2009; Yakymenko et al., 2016; Bioinitiative, 2012). In aggregate, for the high frequency (radiofrequency, RF) part of the spectrum, these reviews show that RF radiation below the FCC guidelines can result in:

• carcinogenicity (brain tumors/glioma, breast cancer, acoustic neuromas, leukemia, parotid gland tumors),

• genotoxicity (DNA damage, DNA repair inhibition, chromatin structure),

• mutagenicity, teratogenicity,

• neurodegenerative diseases (Alzheimer’s Disease, Amyotrophic Lateral Sclerosis),

• neurobehavioral problems, autism, reproductive problems, pregnancy outcomes, excessive reactive oxygen species/oxidative stress, inflammation, apoptosis, blood-brain barrier disruption, pineal gland/melatonin production, sleep disturbance, headache, irritability, fatigue, concentration difficulties, depression, dizziness, tinnitus, burning and flushed skin, digestive disturbance, tremor, cardiac irregularities,

• adverse impacts on the neural, circulatory, immune, endocrine, and skeletal systems

From this perspective, RF is a highly pervasive cause of disease! The response from industry has been that no mechanism could explain the biological action of non-thermal and non-ionizing EM fields. Yet, reports of clear perturbations of biological systems at levels near or even below 1000 μW/m² (Bioinitiative, 2019) were explained by perturbations in electron and proton transfers supporting ATP production in mitochondria (Sanders et al., 1980; 1985) exposed to RF or ELF signals (Li and Heroux, 2014). To obtain another perspective on the full spectrum of adverse effects from wireless radiation, a query was run on Medline to retrieve representative records associated with adverse EMF effects (mainly, but not solely, RF). Over 5400 records were retrieved, and the leading Medical Subject Headings (MeSH) were extracted. The categories of adverse impacts from the two approaches (the published reviews and the Medline query) match quite well. The adverse health effects range from myriad feelings of discomfort to life-threatening diseases. The full list of MeSH Headings associated with this retrieval is shown in Appendix 1 of (Kostoff, 2019). The interested reader can ascertain what other diseases/symptoms were included. The 5400+ references retrieved are shown in Appendix 2 of (Kostoff, 2019).

5. What types of biological and health effects can be expected from 5G wireless networking technology?

The potential 5G adverse effects derive from the intrinsic nature of the radiation, and its interaction with tissue and target structures. 4G networking technology was associated mainly with carrier frequencies in the range of ∼1–2.5 GHz (cell phones, WiFi). The wavelength of 1 GHz radiation is 30 cm, and the penetration depth in human tissue is a few centimeters. In its highest performance (high-band) mode, 5G networking technology is mainly associated with carrier frequencies at least an order of magnitude greater than the 4G frequencies, although, as stated previously, “ELFs (0–3000 Hz) are always present in all telecommunication EMFs in the form of pulsing and modulation”. Penetration depths for the carrier frequency component of high-band 5G wireless radiation will be on the order of a few millimeters (Alekseev et al., 2008a, b). At these wavelengths, one can expect resonance phenomena with small-scale human structures (Betzalel et al., 2018). Additionally, numerical simulations of millimeter-wave radiation resonances with insects showed a general increase in absorbed RF power at and above 6 GHz, in comparison to the absorbed RF power below 6 GHz. A shift of 10 % of the incident power density to frequencies above 6 GHz was predicted to lead to an increase in absorbed power of between 3 % and 370 % (Thielens et al., 2018). The common ‘wisdom’ presented in the literature and media is that, if there are adverse impacts resulting from high-band 5G, the main impacts will be focused on near-surface phenomena, such as skin cancer, cataracts, and other skin conditions. However, there is evidence that biological responses to millimeter-wave irradiation can be initiated within the skin, and the subsequent systemic signaling in the skin can result in physiological effects on the nervous system, heart, and immune system (Russell, 2018). Additionally, consider the following reference (Zalyubovskaya, 1977).
This is one of many translations of articles produced in the Former Soviet Union on wireless radiation (also, see reviews of Soviet research on this topic by McRee (1979, 1980), Kositsky et al. (2001), and Glaser and Dodge (1976)). On p. 57 of the pdf link, the article by Zalyubovskaya addresses biological effects of millimeter radiowaves. Zalyubovskaya ran experiments using power fluxes of 10,000,000 μW/m² (the FCC (Federal Communications Commission) guideline limit for the general public today in the USA), and frequencies on the order of 60 GHz. Not only was skin impacted adversely, but so were heart, liver, kidney, and spleen tissue, as well as blood and bone marrow properties. These results reinforce the conclusion of Russell (quoted above) that systemic results may occur from millimeter-wave radiation. To re-emphasize, for Zalyubovskaya’s experiments, the incoming signal was unmodulated carrier frequency only, and the experiment was single stressor only. Thus, the expected real-world results (when human beings are impacted, the signals are pulsed and modulated, and there is exposure to many toxic stimuli) would be far more serious and would be initiated at lower (perhaps much lower) wireless radiation power fluxes. The Zalyubovskaya paper was published in 1977. The referenced version was classified in 1977 by USA authorities and declassified in 2012. What national security concerns caused it (and the other papers in the linked pdf reference) to be classified for 35 years, until declassification in 2012? Other papers on this topic with similar findings were published in the USSR (and the USA) at that time, or even earlier, but many never saw the light of day, both in the USSR and the USA.
It appears that the potentially damaging effects of millimeter-wave radiation on the skin (and other major systems in the body) have been recognized for well over forty years, yet today’s discourse only revolves around the possibility of modest potential effects on the skin and perhaps cataracts from millimeter-wave wireless radiation.
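The power fluxes in this article are quoted in μW/m², while exposure limits are often stated in W/m² or mW/cm². As a hedged illustration only, the Python sketch below converts the two figures quoted in the text (the 10,000,000 μW/m² used in Zalyubovskaya’s experiments and the ∼1000 μW/m² level mentioned in Section 4) into those units. The function and constant names are hypothetical and chosen for this example.

```python
# Conversion factors (exact by definition of the SI prefixes).
UW_PER_W = 1e6      # microwatts in one watt
UW_PER_MW = 1e3     # microwatts in one milliwatt
CM2_PER_M2 = 1e4    # square centimetres in one square metre

# Figures quoted in the article, in microwatts per square metre.
GUIDELINE_UW_PER_M2 = 10_000_000   # power flux used by Zalyubovskaya (Section 5)
LOW_LEVEL_UW_PER_M2 = 1_000        # level cited for biological perturbations (Section 4)


def to_w_per_m2(uw_per_m2: float) -> float:
    """Convert microwatts per square metre to watts per square metre."""
    return uw_per_m2 / UW_PER_W


def to_mw_per_cm2(uw_per_m2: float) -> float:
    """Convert microwatts per square metre to milliwatts per square centimetre."""
    return uw_per_m2 / UW_PER_MW / CM2_PER_M2


if __name__ == "__main__":
    for label, value in (("guideline figure", GUIDELINE_UW_PER_M2),
                         ("low-level figure", LOW_LEVEL_UW_PER_M2)):
        print(f"{label}: {to_w_per_m2(value)} W/m^2, "
              f"{to_mw_per_cm2(value)} mW/cm^2")
    # Ratio between the two quoted figures.
    print("ratio:", GUIDELINE_UW_PER_M2 / LOW_LEVEL_UW_PER_M2)
```

Under these conversions, 10,000,000 μW/m² corresponds to 10 W/m², or 1 mW/cm², and the two quoted figures differ by a factor of 10,000.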

6. What is the consensus on adverse effects from wireless radiation?

Not all studies of wireless radiation have shown adverse effects. For example, consider potential genotoxic effects of mobile phone radiation. A study investigating “the effect of mobile phone use on genomic instability of the human oral cavity's mucosa cells” concluded “Mobile phone use did not lead to a significantly increased frequency of micronuclei” (Hintzsche and Stopper, 2010). Conversely, a 2017 study investigated buccal cell preparations for genomic instability, and found “The frequency of micronuclei (13.66x), nuclear buds (2.57x), basal (1.34x), karyorrhectic (1.26x), karyolytic (2.44x), pyknotic (1.77x) and condensed chromatin (2.08x) cells were highly significantly (p = 0.000) increased in mobile phone users” (Gandhi et al., 2017). Also, a 2017 study to ascertain the “effect of cell phone emitted radiations on the orofacial structures” concluded that “Cell phone emitted radiation causes nuclear abnormalities of the oral mucosal cells” (Mishra et al., 2017). Further, a 2016 study to “explore the effects of mobile phone radiation on the MN frequency in oral mucosal cells” concluded “The number of micronucleated cells/1000 exfoliated buccal mucosal cells was found to be significantly increased in high mobile phone users group than the low mobile phone users group” (Banerjee et al., 2016). Finally, a study aimed at investigating the health effects of WiFi exposure concluded “long term exposure to WiFi may lead to adverse effects such as neurodegenerative diseases as observed by a significant alteration on AChE gene expression and some neurobehavioral parameters associated with brain damage” (Obajuluwa et al., 2017). There are many possible reasons to explain this lack of consensus.

1) There may be ‘windows’ in parameter space where adverse effects occur, and operation outside these windows would show a) no effects or b) hormetic effects or c) therapeutic effects. For example, if information content of the signal is a strong contributor to adverse health effects (Panagopoulos, 2019), then experiments that involve only the carrier frequencies may be outside the window where adverse health effects occur. Alternatively, in this specific example, the carrier signal and the information signal could be viewed as a combination of potentially toxic stimuli, where the adverse effects of each component are enabled because of the synergistic effects of the combination.

As another example, an adverse health impact on one strain of rodent was shown for a combination of 50 Hz EMF and DMBA, while no adverse health impact was shown on another rodent strain for the same toxic stimuli combination (Fedrowitz et al., 2004). From a higher-order combination perspective, if genetic abnormalities/differences are viewed conceptually as potentially equivalent to a toxic stimulus for combination purposes, then a synergistic three-constituent combination of 50 Hz EMF, DMBA, and genetics was required to produce adverse health impacts in the above experiment. If these results can be extrapolated across species, then human beings could exhibit different responses to the same electromagnetic stimuli based on their unique genetic predispositions (Caccamo et al., 2013; De Luca et al., 2014).

2) Research quality could be poor, and adverse effects were overlooked. 3) Or, the research team could have had a preconceived agenda, where finding no adverse effects from wireless radiation was THE objective of the study. For example, studies have shown that industry-funded research on wireless radiation adverse health effects is far more likely to show no effects than research funded by non-industry sources (Huss et al., 2007; Slesin, 2006; Carpenter, 2019). Studies in disciplines other than wireless radiation have shown that, for products of high military, commercial, and political sensitivity, ‘researchers’/organizations are hired to publish articles that conflict with the credible science, and therefore create doubt as to whether the product of interest is harmful (Michaels, 2008; Oreskes and Conway, 2011). Unfortunately, given the strong dependence of the civilian and military economies on wireless radiation, incentives for identifying adverse health effects from wireless radiation are minimal and disincentives are many. These perverse incentives apply not only to the sponsors of research and development, but to the performers as well.

Even the Gold Standard for research credibility - independent replication of research results - is questionable in politically, commercially, and militarily sensitive areas like wireless radiation safety, where the accelerated implementation goals of most wireless radiation research sponsors (government and industry) are aligned. It is imperative that highly objective evaluators with minimal conflicts of interest play a central role ensuring that rigorous safety standards for wireless radiation systems are met before widescale implementation is allowed.

7. Conclusions

Wireless radiation offers the promise of improved remote sensing, improved communications and data transfer, and improved connectivity. Unfortunately, there is a large body of data from laboratory and epidemiological studies showing that previous and present generations of wireless networking technology have significant adverse health impacts. Much of this data was obtained under conditions not reflective of real life. When real-life considerations are added, such as 1) including the information content of signals along with the carrier frequencies, and 2) including other toxic stimuli in combination with the wireless radiation, the adverse effects associated with wireless radiation are increased substantially. Superimposing 5G radiation on an already embedded toxic wireless radiation environment will exacerbate the adverse health effects shown to exist. Far more research and testing of potential 5G health effects under real-life conditions is required before further rollout can be justified.

Transparency document

The Transparency document associated with this article can be found in the online version.

Declaration of Competing Interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

https://www.aristsatsakis.com/images/stories/PdFs/2020/Adverse%20health%20effects%20of%205G%20mobile%20networking%20technology%20under%20real-life%20conditions.pdf

I've archived this as a precaution - you never know.....

https://tinyurl.com/s3474a6s

https://www.sciencedirect.com/science/article/abs/pii/S037842742030028X?via%3Dihub

*************************************************************************************************************

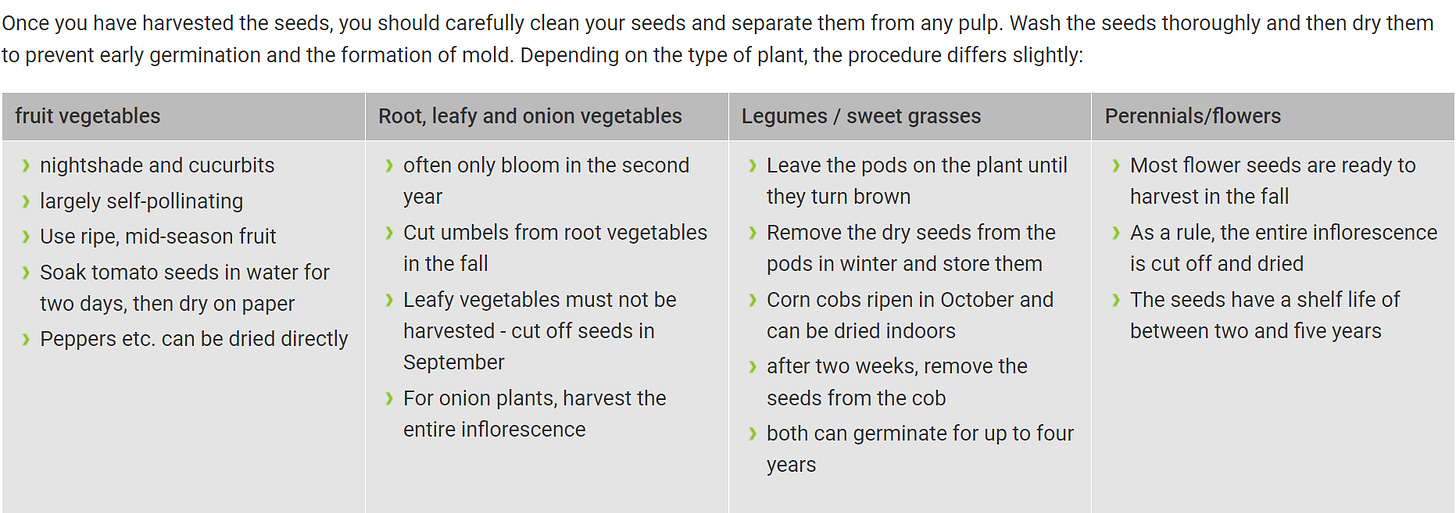

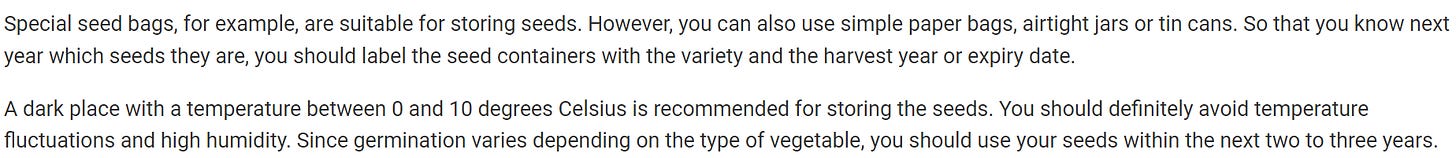

I will be away again for some time (though I will check my mail now and then to read interesting articles), as we have been harvesting seeds for some weeks now. We have been doing this since 2020, and the work keeps growing now that our community has increased to about 40,000. Of course, we also support people in doing this work themselves. In case anyone would like to try it, here are a few tips:

For salad:

To harvest lettuce seeds, allow a few vigorous plants to flower. After 12 to 24 days of ripening, pick the dry fruit clusters and carefully remove the seeds with tweezers. Store the seeds in a cloth bag to prevent rotting.

***********************************************************************************************************

I wish you all continued good luck with all my heart and stay strong!!❤️

This is great, thank you!

That is one highly referenced, deeply researched piece. Definitely one for sharing. Thank you